Ending the schism

However weak the reception of the Mystique was among reviewers and gamers, Matrox was selling a lot of cards in 1997. For anyone who wasn't primarily concerned with 3d quality, MGA chips had a lot to offer. But as more capable 3d accelerators proliferated and their market boomed, Matrox found itself in the odd position of facing image quality concerns, at least among gamers. To release a new MGA-1-like chip in 1998 in the form of the G100 was, shall we say, a bold move. If they had only that, the brand might have been dead for good when it comes to video games. But the G100 was immediately followed by the G200, a wholly new architecture, and lo and behold, rarely were there twins as divergent as these two. This new chip would power a new generation of both Mystique and Millennium cards.

The Card

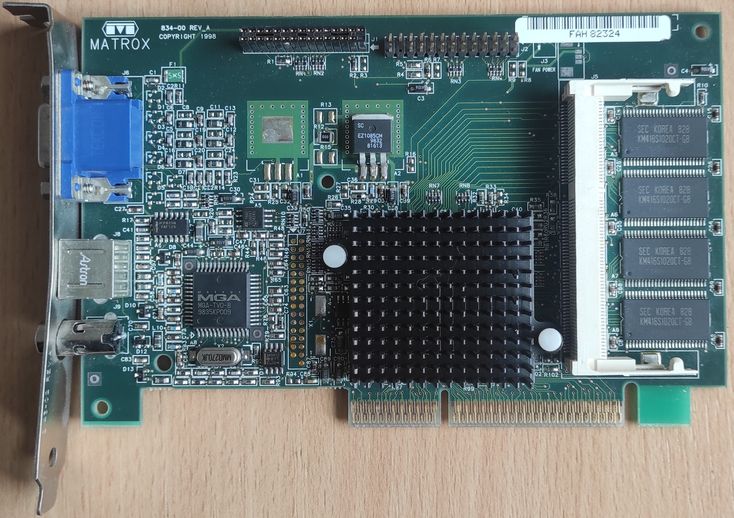

G200 cards look innocent and hardly different from the previous generation. There is the chip with the familiar MGA print, assuming it isn't hidden below the heatsink, which was needed at least for the first batch. The chip is now made of 5 million transistors, a respectable number. Most of them run at 84.4 MHz. The memory of usual latency is connected by a standard 64-bit bus, which does not exactly scream "high performance". The capacity is 8 MB, an average amount for the time. Often there is a connector for an expansion module to double the capacity, but judging by how rare the module is in the wild, it was hardly ever used. Boards with the module installed lower their frequencies a bit, so use one only if you really need the extra capacity.

The card reviewed is the gamer's flavor of the early G200: the Mystique G2+ for AGP.

The memory type is regular SDRAM, with no more multiporting, even though the chip also powers the new Millennium cards. The brand is safe nonetheless, because the G200 cards will still be the fastest thanks to the DualBus architecture. Inside the chip are two parallel 64-bit buses, unlocking new heights of effective bandwidth and lower latencies. Should one bus execute a read and the other a write, both operations may proceed in the same cycle. Although the memory controller itself has an ordinary 64-bit width, the architecture can keep it busier. At a typical 112.5 MHz, the local memory offers a modest 900 MB/s of bandwidth. It was the same for the Millennium G200, which carries SGRAM of the same clock. Another difference was the 250 MHz RAMDAC speed, as opposed to 230 MHz on this Mystique.
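
The 900 MB/s figure follows directly from the bus width and the memory clock. A minimal sketch of that arithmetic, assuming one transfer per clock (as with ordinary SDRAM) and 1 MB = 10^6 bytes; the function name is made up for illustration:

```python
def peak_bandwidth_mb_s(bus_width_bits: int, clock_mhz: float,
                        transfers_per_clock: int = 1) -> float:
    """Theoretical peak memory bandwidth in MB/s (1 MB = 10**6 bytes)."""
    bytes_per_transfer = bus_width_bits // 8
    return bytes_per_transfer * clock_mhz * transfers_per_clock


# 64-bit bus at 112.5 MHz, as quoted for this Mystique.
mystique_bandwidth = peak_bandwidth_mb_s(bus_width_bits=64, clock_mhz=112.5)
print(f"{mystique_bandwidth:.0f} MB/s")  # 900 MB/s
```

This is a ceiling only. What the DualBus design changes is how close the chip can get to it, not the number itself.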

The MGA Overhaul

The AGP connector has all the pins and indeed the full 1.0 specification is supported. At least by the G200A; for some reason, Matrox also had the G200P for PCI. More importantly, the geometry data is then received by a triangle setup engine, the first one by Matrox. And they did it right on that first try. Parallel instruction execution independent of the rest of the pipeline yields over 1.5 million triangles per second in fans, and backface culling is processed as well. Thus the CPU is freed from tasks it had to perform for the MGA-1 family. This setup unit, called WARP, is so separate that it has its own clock domain, and yes, some tools can adjust it. Moving on to rendering, 32-bit precision is maintained throughout, and should the render target use 16-bit color, the result is dithered down only at the very end. The Z-buffer also supports 32 bits. Texturing can be done with a true trilinear filter. By this time many vendors were touting 11-level LOD for mipmaps, and I am happy to report Matrox has it functioning properly. Finally, Matrox said goodbye to the archaic stipple-alpha methods and managed to cram in a proper alpha-blending unit supporting all the combinations used. We are all set for some high-quality 3d rendering (as of 1998).
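
Why reducing precision only at the very end matters can be shown with a toy example. The sketch below is not how the G200 works internally: it blends a single color channel in floating point, uses plain rounding where the hardware would dither, and the scene (eight faint smoke layers) is invented. It only compares squeezing the result into 5 bits (one channel of a 16-bit 5-6-5 pixel) once at the end against doing so after every blend.

```python
def blend(src: float, dst: float, alpha: float) -> float:
    """'Source over' alpha blend of one color channel, all values in 0..1."""
    return src * alpha + dst * (1.0 - alpha)


def quantize(value: float, bits: int) -> float:
    """Round a 0..1 value to the nearest level a `bits`-wide channel can store."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


def composite(layers, background, per_step_bits=None, target_bits=5):
    """Blend (color, alpha) layers over a background.

    per_step_bits=None keeps full precision between layers and reduces the
    result only once at the end; otherwise every intermediate result is
    squeezed into `per_step_bits` bits, as a low-precision pipeline would do.
    """
    value = background
    for color, alpha in layers:
        value = blend(color, value, alpha)
        if per_step_bits is not None:
            value = quantize(value, per_step_bits)
    return quantize(value, target_bits)


# Hypothetical scene: eight faint white smoke layers over a dark background.
smoke = [(1.0, 0.02)] * 8
exact = 0.2
for color, alpha in smoke:
    exact = blend(color, exact, alpha)

late = composite(smoke, 0.2)                    # reduce once, at the very end
early = composite(smoke, 0.2, per_step_bits=5)  # reduce after every layer

print(f"exact {exact:.4f}  late {late:.4f}  early {early:.4f}")
```

In this setup the per-step variant gets stuck on one 5-bit level because each faint layer changes the value by less than a rounding step, while the late reduction lands next to the exact result. Errors of that kind are what show up as banding or heavy dither patterns when many transparent surfaces overlap.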

Hardly any sign of dithering despite several overlapping transparent surfaces and 16-bit color depth.

The pipeline has all the features wanted in 1998 and can apply them to one pixel every cycle. Nothing more, nothing less. 84 million pixels every second. Not an earth-shattering number, but are they pretty?
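
To put 84 million pixels per second into perspective, here is a back-of-the-envelope estimate of the framerate ceiling that fill rate alone allows. The overdraw factor of 3 is an assumption for illustration, not a measurement, and the helper names are made up:

```python
def fill_rate_mpixels(core_clock_mhz: float, pixels_per_clock: int = 1) -> float:
    """Peak fill rate in millions of pixels per second."""
    return core_clock_mhz * pixels_per_clock


def fps_ceiling(fill_rate_mpix: float, width: int, height: int,
                overdraw: float = 1.0) -> float:
    """Upper bound on frames per second if fill rate were the only limit."""
    pixels_per_frame = width * height * overdraw
    return fill_rate_mpix * 1e6 / pixels_per_frame


g200 = fill_rate_mpixels(core_clock_mhz=84.4)  # one pixel per cycle

for width, height in [(640, 480), (800, 600), (1024, 768)]:
    # Assumed average overdraw of 3: every screen pixel is drawn three times.
    print(f"{width}x{height}: at most {fps_ceiling(g200, width, height, 3.0):.0f} fps")
```

Real framerates end up lower, since memory bandwidth, the CPU, and the driver all take their share.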

Experience

The noise Matrox made about Vibrant Color Quality, the marketing name for high internal precision, is not baseless. I am not sure what exactly it is, maybe my eyes are too adjusted to the abrasive images produced by older accelerators, or maybe it is the famous analog output quality of Matrox, but the colors are vibrant and pleasing. On the other hand, I am afraid there isn't one driver to rule them all.

This frame lends itself nicely to mipmapping comparisons. G200 shows many levels with proper selection.

Check out the G200 gallery.

A driver as recent as 6.83.017 has broken OpenGL. I would recommend 6.82.016, unless you are also going to play early 3d games, like those based on the Mechwarrior 2 engine. For those, and also Resident Evil, going as far back as 4.12.013 seems best. Then again, to play Shadows of the Empire with fog, use driver 4.33.045. These old drivers do not yet have an OpenGL ICD. A driver like 5.52.015 could be the middle of the road, but don't expect to play newer games like Shogo. And none of those drivers could render the backgrounds of Daytona USA correctly. This driver situation isn't perfect, but by switching between those versions I got almost everything working properly. Thief gave me the most headaches:

The best texturing I could get is at this reduced color resolution.

Performance

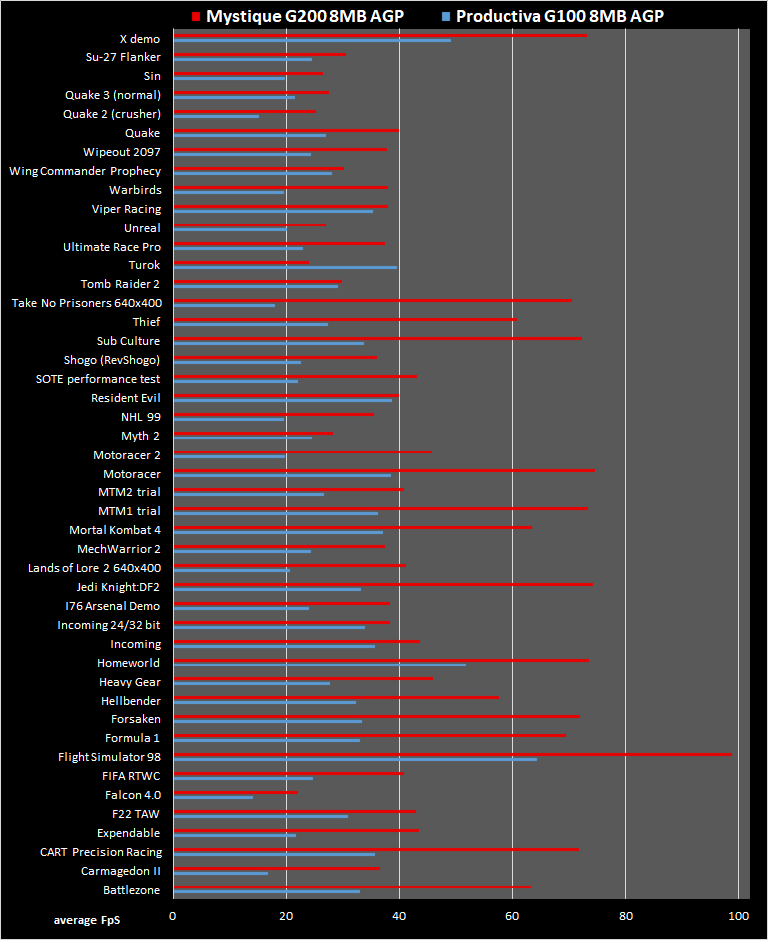

First of all, let's compare the G200 with the G100 equipped with almost the same memory. The improvements are clear: the new Mystique leads the Productiva by around 80 %. Well, average framerates are higher by 60 %, but vsync is most probably shrinking the difference.

As usual, click to see minimal framerates.

So against any older Matrox chip, the G200 wins in every regard by a huge margin. Considering how many other things had to be newly designed, the leap from MGA-1 to MGA-2 is immense. But how does it stand against newer competition?

The performance is barely above the integrated graphics of the i815 chipset equipped with an AIMM module. Only in minimum framerates does the Mystique lead by several percent. Without OpenGL titles (Quakes, Sin, Su-27, and also Half-Life, which is set to OpenGL) we could speak of double-digit gains, but Matrox did not get the ICD into a shape comparable with Direct3D, at least as far as games are concerned. So that is the kind of integrated graphics for which switching to this card would not help in terms of speed. When it comes to image quality, though, the G200 is an improvement. Keep in mind we are dealing with the lowest SKU of the family. The old Mystique was curiously sensitive to memory timings. I found no tool that would allow me to experiment with the G200, but there is a certain sensitivity toward memory type. When SGRAM is used, some small gains are expected thanks to, for example, fast z-buffer clears. For this chip, however, 32-bit performance is also improved beyond expectations. Just a quick illustration by Quake 3:

This also begs explanation. Maybe SGRAM is driven tighter than SDRAM. So the early Millennium G200 is a measurably faster 3d accelerator than the Mystique. But I am saying early on purpose. Matrox entered 1999 with a new chip revision, D2, which is most probably a die shrink. It no longer needs a heatsink and allowed some cards to run at higher clocks. The memory clock was in that case increased proportionally, and perhaps from this point newer Millenniums also carried basic SDRAM memory. Despite strict specifications in the beginning, Matrox managed to properly muddy the choice with many, many models.

Hope in hard times

The G200 ended the 3d image quality deficiencies of previous chips and established a new holistic quality standard for Matrox. No matter what it is displaying, the G200 will generally render nicer images than its competitors. On the other hand, the uninspiring speed of both the hardware and driver development held back videogame-centric buyers. By the end of 1998, Matrox had half the market share of its peak a year prior. The G200 is nonetheless the most successful graphics chip of Matrox, because it finally covered game-centric, multi-display, and prosumer solutions, albeit with little difference between them. Considering how many ambitious chips there were, this is one extra shiny medal on the chest of the chip.

Despite being the slow one among the new architectures, it was very different from previous MGA chips, because Matrox became a 3d image quality leader for the first time. The shocker came the next year, when the G400, with all the traditional advantages of Matrox, showed framerates on par with the hyped TNT2 and Voodoo3. Devoted fans were waiting for the next big thing that would show GeForces and Radeons what Matrox can do, but the waiting took too long. The following chips were about making the G400 more cost-efficient. After some time Matrox attempted a comeback to the high-performance market with Parhelia in the summer of 2002. But the core was not very well balanced for games. It offered more geometry performance and color precision, which is good for professional applications; games, however, suffered from poor pixel fillrate. Parhelia appealed only to a small niche, and Matrox left this arena to concentrate on graphics technologies for other uses. Somehow the company goes on with less than 1 percent market share, although its last chips were released in 2008 and it now offers products powered by AMD.